New Words

New Words is a speculative research project exploring the use of machine learning for the evolution of language. Large language models (LLMs) are fantastic at capturing our language as it currently is, but language is constantly evolving and adapting. Can machine learning help us create something truly new and unbounded by its training data?

A collaboration with Ryan Murdock

Background

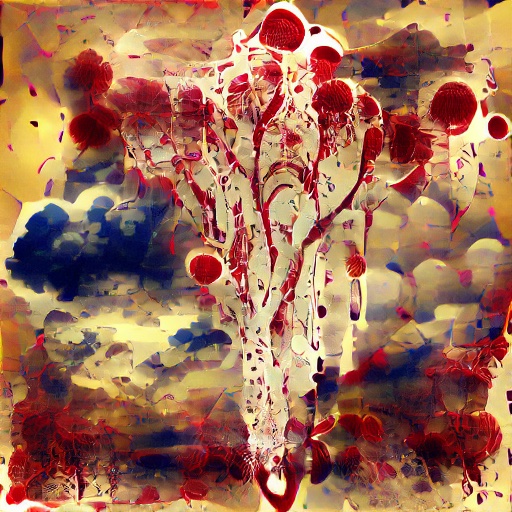

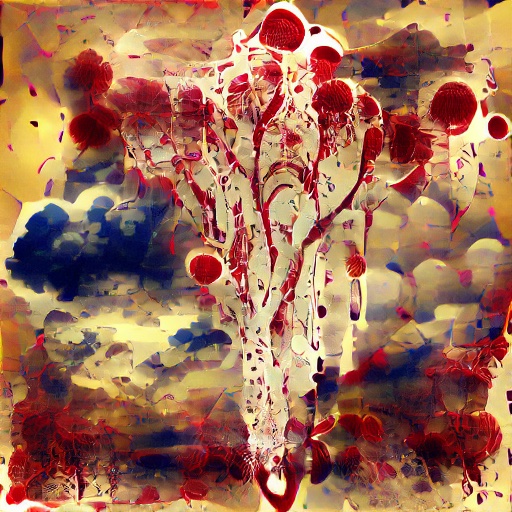

Ryan: My part of this project started with images, asking: how would a CLIP model caption an image given severe constraints on its vocabulary that were progressively tightened? Ruling out every typical description turned into a sort of forced novelty search, testing how far these limits could be pushed while still producing a fitting caption. For example, the image below was titled 'Menstruhaunted Rainfall' (2021) using this method, with the constraint that obvious words that first come to mind, like "tree" or "blood", could not be used. As is often the case for humans, constraints can provide a clear path towards more interesting results.

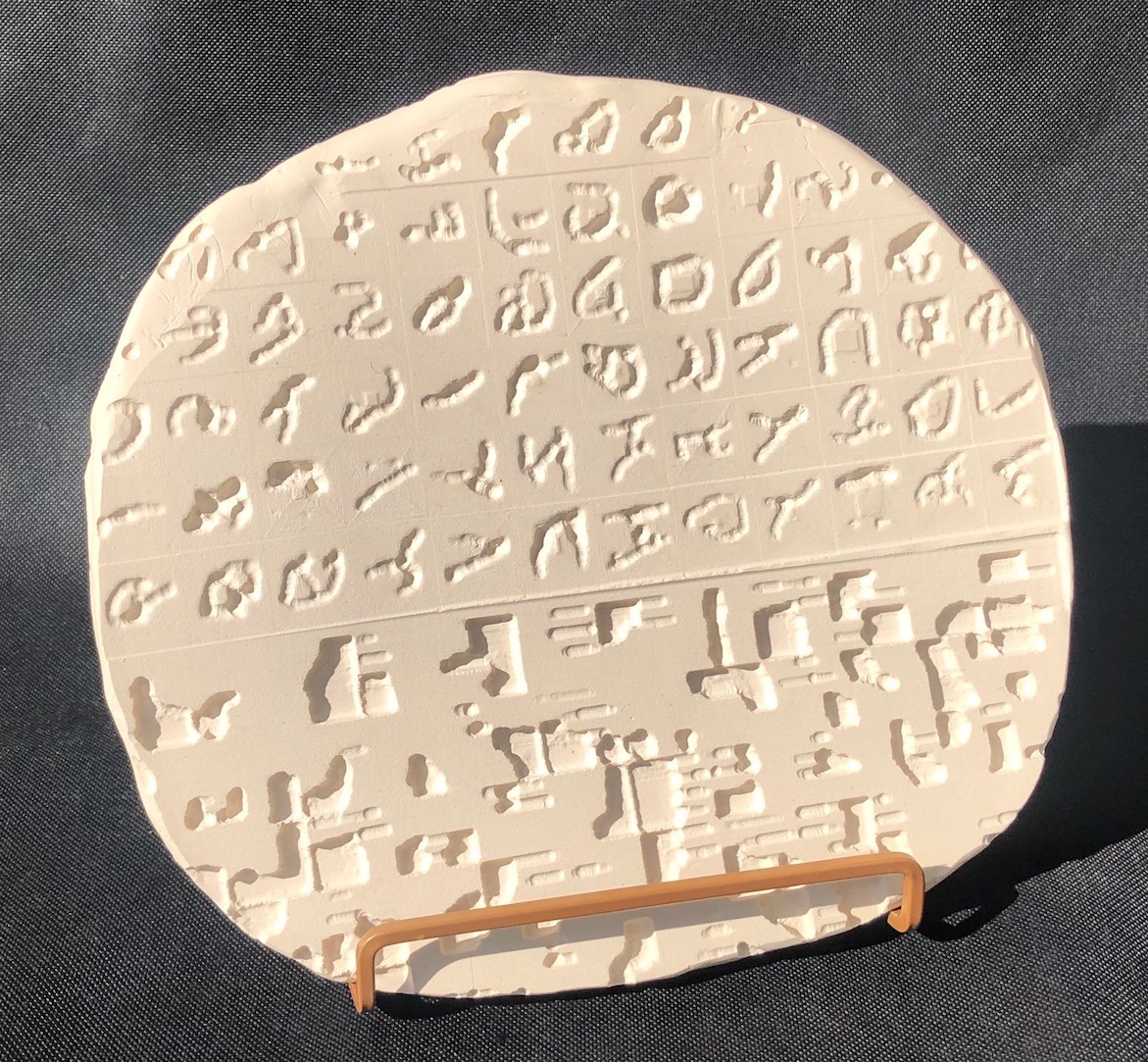

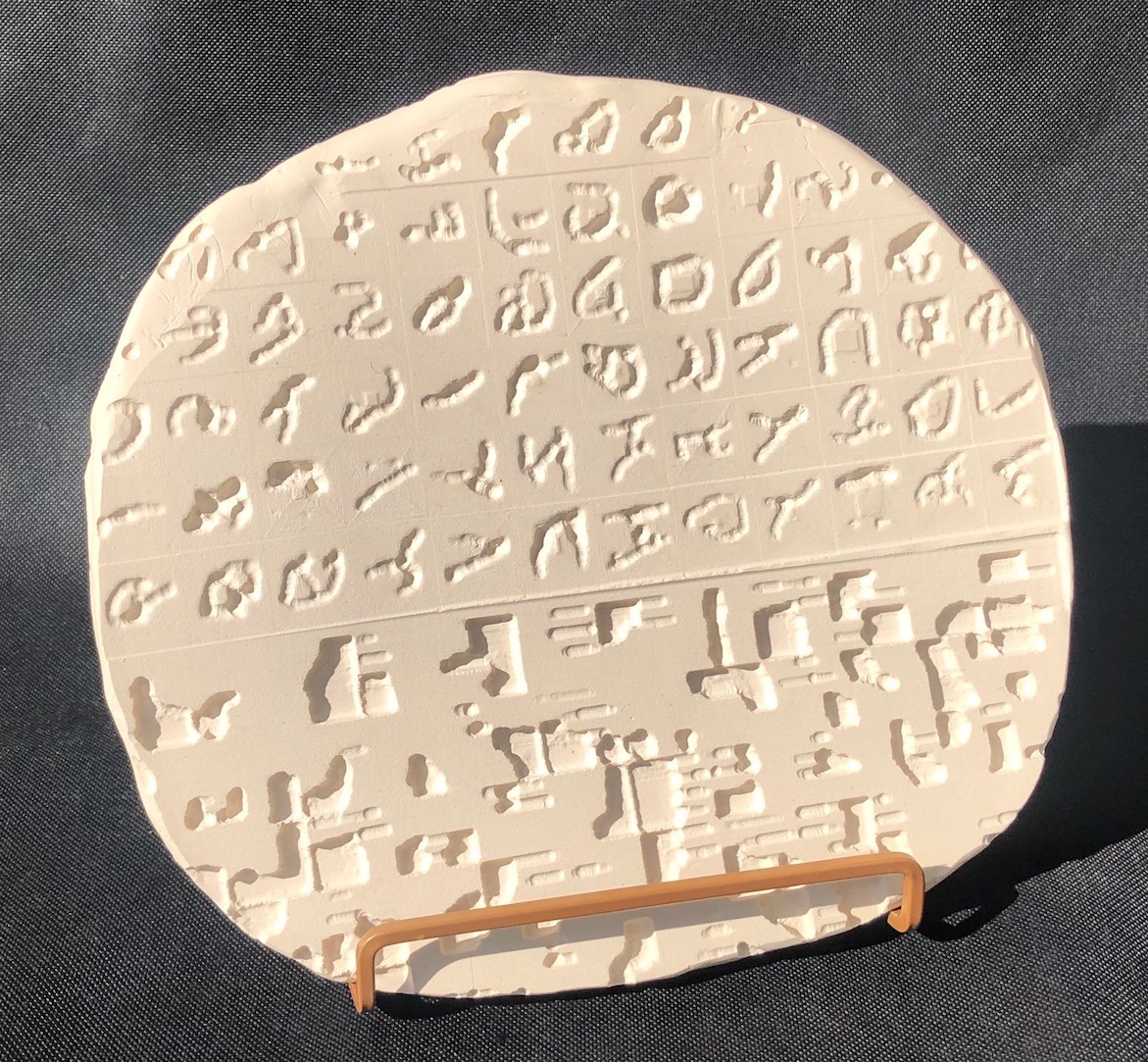

Joel: My earlier works, Dimensions of Dialogue (part1 and part2 - tablets), were attempts to explore how machine learning networks could create new and emergent language artifacts via relatively simple rules and new ML architectures. This was part of a small wave of research exploring data-free generative ML. The rise of LLMs has many implications for language, but are they capable of truly new things, or do they merely reuse existing language in frozen form? When asked, ChatGPT is unable to invent new words beyond simple portmanteaus. This work is motivated by the organic & social evolution of language. Generative technology is at its best when it can inspire us with the possibilities of what can be.

Methodology

Part 1 - Discover candidate phrases or word spaces.

To generate candidate phrases, we surveyed sparse areas of CLIP space. We also selected phrases manually, by exploring known words in other languages and common English expressions that have no single-word equivalent.

Discovering empty CLIP spaces: All English nouns, verbs and adjectives were embedded into CLIP text space to form the "word-set." Then, 1000 random "probe" embeddings were generated to form the "probe-set." Each probe was iteratively moved to the midpoint of its nearest neighbors in the word-set until convergence. Then, the elements of the probe-set with the highest average distance to their nearest neighbors in the word-set were selected and manually filtered. These probes marked the midpoints of large empty spaces.
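The probing loop described above can be sketched roughly as follows. This is a minimal illustration, not the code used in the project: the number of neighbors `k`, the use of cosine distance on unit-normalised vectors, the convergence tolerance, and the function name `find_empty_regions` are all assumptions, and the word-set would come from a CLIP text encoder rather than the random stand-in used in the usage note.

```python
import numpy as np

def unit(x):
    """Normalise rows to unit length so dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def find_empty_regions(word_set, n_probes=1000, k=8, max_iters=100, tol=1e-6, seed=0):
    """Move random probes to the midpoint of their k nearest words until they settle.

    Returns the probes sorted by mean cosine distance to their k nearest
    words (emptiest regions first), together with those distances.
    k, tol and max_iters are assumed values, not taken from the write-up.
    """
    rng = np.random.default_rng(seed)
    word_set = unit(word_set)
    probes = unit(rng.normal(size=(n_probes, word_set.shape[1])))

    for _ in range(max_iters):
        sims = probes @ word_set.T                    # (n_probes, n_words)
        nearest = np.argsort(-sims, axis=1)[:, :k]    # indices of the k nearest words
        midpoints = unit(word_set[nearest].mean(axis=1))
        shift = np.linalg.norm(midpoints - probes, axis=1).max()
        probes = midpoints
        if shift < tol:                               # every probe has stopped moving
            break

    sims = probes @ word_set.T
    top_sims = -np.sort(-sims, axis=1)[:, :k]         # k highest similarities per probe
    mean_dist = (1.0 - top_sims).mean(axis=1)         # mean cosine distance
    order = np.argsort(-mean_dist)                    # largest distance first
    return probes[order], mean_dist[order]
```

With a random matrix standing in for the embedded word-set, `find_empty_regions(np.random.default_rng(1).normal(size=(500, 32)), n_probes=50)` returns 50 probes ranked from emptiest to most crowded; the top of that ranking is what would then be filtered by hand.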

Part 2 - Generate

After we've found a phenomenon that needs a word, we create, for each token we want to learn, a set of learnable parameters with one entry per word in CLIP's vocabulary. We then use the Gumbel-Softmax trick to map each set of parameters to a single CLIP token, and optimize the cosine similarity between the resulting CLIP embedding and whatever target image or text embedding we like. We constrain the model to use words outside of the description and to learn only a few tokens without spaces in them.
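To make the optimization step concrete, here is a toy sketch in plain NumPy with hand-written gradients. Several things in it are assumptions made for illustration: the "text encoder" is just the mean of the chosen token embeddings (a real run would pass the tokens through CLIP), the straight-through variant of Gumbel-Softmax is used so that each slot picks exactly one token in the forward pass, and the names `learn_tokens`, `token_table`, `banned`, `tau` and `lr` are hypothetical.

```python
import numpy as np

def cosine(a, b):
    """Cosine similarity between two 1-D vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def learn_tokens(token_table, target, banned=(), n_tokens=2, steps=300,
                 tau=1.0, lr=0.5, seed=0):
    """Learn n_tokens vocabulary entries whose toy embedding is close to target.

    token_table: (vocab_size, dim) stand-in for CLIP's token embeddings.
    banned: token ids that may never be chosen (e.g. words in the description).
    """
    rng = np.random.default_rng(seed)
    vocab_size = token_table.shape[0]
    logits = np.zeros((n_tokens, vocab_size))          # one logit per vocab entry per slot
    mask = np.zeros(vocab_size)
    mask[np.asarray(list(banned), dtype=int)] = -np.inf

    for _ in range(steps):
        # Gumbel-Softmax sample: add Gumbel noise, then a temperature softmax.
        z = (logits + rng.gumbel(size=logits.shape)) / tau + mask
        z -= z.max(axis=1, keepdims=True)
        soft = np.exp(z)
        soft /= soft.sum(axis=1, keepdims=True)

        # Straight-through: the forward pass uses one hard token per slot.
        hard = np.zeros_like(soft)
        hard[np.arange(n_tokens), soft.argmax(axis=1)] = 1.0
        emb = (hard @ token_table).mean(axis=0)        # toy encoder (assumption)

        # Gradient of the loss -cosine(emb, target) with respect to emb.
        e_norm, t_norm = np.linalg.norm(emb), np.linalg.norm(target)
        cos = emb @ target / (e_norm * t_norm)
        d_emb = -(target / (e_norm * t_norm) - cos * emb / e_norm**2)

        # The backward pass treats `hard` as if it were `soft` (softmax Jacobian).
        d_y = np.tile(token_table @ d_emb / n_tokens, (n_tokens, 1))
        d_z = soft * (d_y - (soft * d_y).sum(axis=1, keepdims=True))
        logits -= lr * d_z / tau

    token_ids = (logits + mask).argmax(axis=1)         # final discrete read-out
    return token_ids, cosine(token_table[token_ids].mean(axis=0), target)
```

Because banned ids receive a logit of negative infinity, they get zero probability at every step and can never appear in the result, which is one simple way to enforce the "words outside of the description" constraint. In practice an autodiff framework would replace the manual gradient code.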

Hard Prompts Made Easy by Wen et al. uses a similar approach but approximates gradients to obtain real word embeddings. Before Wen et al., RiversHaveWings & I separately found that the Gumbel Softmax trick works & separately decided not to release notebooks due to bias concerns.

Future work

Vector arithmetic: We can also do Word2Vec-style addition and subtraction of embeddings, e.g. learning a word for the point in CLIP space given by "humans" minus "beasts", which approximates the difference between the two concepts (which is, optimistically per the system, "ethics").
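The arithmetic itself is simple to illustrate. The sketch below subtracts one embedding from another and looks up the nearest existing words by cosine similarity; this nearest-neighbor lookup is a simpler stand-in for learning new tokens against the difference vector. The three-dimensional vectors are hand-made toy values chosen so that the subtraction lands on "ethics"; they say nothing about real CLIP geometry, and `nearest_words` is a hypothetical helper.

```python
import numpy as np

def nearest_words(query, word_vectors, words, exclude=(), top_k=3):
    """Rank words by cosine similarity to the query vector, skipping excluded words."""
    q = query / np.linalg.norm(query)
    mat = word_vectors / np.linalg.norm(word_vectors, axis=1, keepdims=True)
    sims = mat @ q
    ranked = [(words[i], float(sims[i]))
              for i in np.argsort(-sims) if words[i] not in exclude]
    return ranked[:top_k]

# Toy embeddings (hand-made for illustration, not CLIP outputs).
words = ["humans", "beasts", "ethics", "fur"]
vectors = np.array([
    [1.0, 1.0, 0.0],   # humans
    [1.0, 0.0, 0.0],   # beasts
    [0.0, 1.0, 0.0],   # ethics
    [1.0, 0.0, 1.0],   # fur
])

# "humans" minus "beasts", then look for the closest remaining word.
difference = vectors[0] - vectors[1]
result = nearest_words(difference, vectors, words, exclude={"humans", "beasts"}, top_k=1)
print(result)
```

Excluding the two input words from the ranking matters: with real embeddings the operands themselves are often among the nearest neighbors of their own difference.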

Language games between language models: The original plan (out of scope for a one-day hack) was to use dynamically generated conversations between existing language models. Given multiple candidate words, a language model would have to correctly guess which word was assigned to the given meaning out of a set of random words. This would ensure the new words were vaguely guessable. There is also the goal of modeling language evolution within simulations of agent-based social networks.

Acknowledgments

This project was done while Joel & Ryan were residents at Stochastic Labs' summer 2023 residency on AI, specifically during a Sunday hackathon at Replicate.